Whenever I look at the stats for this modest blog, I always notice the same pattern. The number of visits aligns perfectly with the Pareto principle: 20% of our posts generate 80% of our page views. Of that 20%, the majority discuss how to calculate the size of a representative sample in order to conduct an opinion poll.

Given the apparent interest in this topic, today we are launching a series of posts on sampling: what it is, different sampling methods, when it’s useful to use one method or another, and so on. We hope that this information will be useful to students, to stats enthusiasts, and to professionals whose statistical expertise is a little rusty.

WHAT IS SAMPLING?

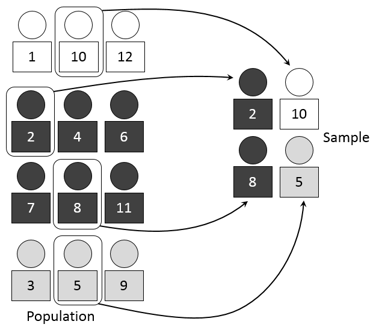

Sampling is the process of selecting a group of individuals from a population in order to study them and characterize the population as a whole.

It’s a pretty simple idea. Let’s say we want to know something about a population—the percentage of people in Mexico who smoke, for example. One way to go about this would be to call up everyone in Mexico (122 million people) and ask them if they smoke. The other way would be to get a subgroup of individuals together (1,000 people, for example) and ask them if they smoke, and then use this information as an approximation of the information we really want. This group of 1,000 people who make it possible for us to understand the behavior of Mexicans in general is called a sample, and the way we select them is called sampling.

In the above definition, we used two new terms that will be essential throughout this series of posts:

- Population (sometimes called a universe): All of the individuals that we want to study or characterize. In the example above, our population is everyone living in Mexico, but we could talk about any sort of population, however broad or specific. For example, if we want to know how much Mexican smokers smoke on average, our population would be “smokers in Mexico.”

- Sample: This is the group of individuals in the population that we select to study—through a survey, for example.

WHY DOES SAMPLING WORK?

Sampling is useful because we can pair it with an inverse process known as generalization. To understand a population, the steps we follow are: (1) select a sample from the population, (2) measure certain data or an opinion for all individuals in the sample, and (3) project the result we observe in the sample onto the population. This projection or extrapolation is called generalization of results.

Generalization of results necessarily adds a certain degree of error to those results. Imagine that we asked a random sample of 1,000 Mexicans if they smoke, and 25% say that they do. If 25% of 1,000 randomly selected Mexicans smoke, simple logic would suggest that we would get about the same result if we asked all 122 million Mexicans. Since we chose our sample at random, however, it’s possible that we chose a higher proportion of smokers than that of the population. It's also possible that smokers are underrepresented in our sample. Random sampling might give us a different percentage than the actual percentage—maybe 25.2% of the population smokes, for example. Generalization of results from a sample, then, means accepting a certain amount of error, as illustrated below.
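To make this concrete, here is a minimal simulation sketch. It assumes a hypothetical true share of smokers of 25.2% and models each respondent as an independent random draw; the function name `sample_share` and the fixed seed are illustrative choices, not part of any real survey.

```python
import random

def sample_share(population_share, sample_size, rng):
    """Share of smokers observed in one random sample.

    Each respondent is modeled as an independent draw, a close
    approximation when the population is far larger than the sample.
    """
    smokers = sum(rng.random() < population_share for _ in range(sample_size))
    return smokers / sample_size

rng = random.Random(42)   # fixed seed so the run is repeatable
true_share = 0.252        # hypothetical "real" share of smokers

# Repeat the same 1,000-person survey five times.
shares = [sample_share(true_share, 1000, rng) for _ in range(5)]
for s in shares:
    print(f"sample estimate: {s:.1%}")
```

Each run of the survey lands near the true value but rarely exactly on it, which is the error we accept when we generalize.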

Fortunately, we can use statistics to quantify the error inherent in generalizing from a sample to a population. We do this using two parameters: the margin of error, which is the maximum difference we’d expect between the data we observe in the sample and the actual data in the population, and the confidence level, which is our degree of certainty that the real data for the population falls within our margin of error.

If, for our hypothetical study, we ask a sample of 471 Mexicans if they smoke, our results will have a margin of error of ±5% with a confidence level of 97%. This is the proper way of expressing results when sampling.
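The figures above can be checked with a short sketch. It relies on assumptions the post does not spell out: the normal approximation for a proportion, the most conservative case p = 0.5, and a population large enough to ignore any finite population correction. The function name `margin_of_error` is ours.

```python
from math import sqrt
from statistics import NormalDist

def margin_of_error(n, confidence, p=0.5):
    """Margin of error for a proportion estimated from a random sample of size n.

    Normal approximation, no finite population correction.
    p = 0.5 is the most conservative guess about the true proportion.
    """
    # Two-sided critical value for the requested confidence level.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return z * sqrt(p * (1 - p) / n)

moe = margin_of_error(471, 0.97)
print(f"±{moe:.1%}")
```

Under these assumptions, 471 respondents at a 97% confidence level give a margin of error of roughly ±5%, in line with the statement above.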

SAMPLE SIZE

What sample size do I need to study a given population? That depends on the size of the population and on the amount of error that you’re ready to accept, as we explain in this post. Greater precision will require a larger sample. If you want absolute certainty in your results, down to the last decimal, your sample has to be as big as your population.

But sample sizes have one key characteristic that explains why sampling is popular in so many fields: as we study larger populations, the necessary sample size represents a diminishing percentage of that population.

There’s a didactic explanation of this phenomenon at Gaussianos.com, an interesting blog on mathematics (in Spanish). Imagine we’re conducting a survey to learn a certain percentage of something (let’s stick with the percentage of smokers) with a predetermined degree of error: a margin of error of 5% with a confidence level of 95%, for example. If the population to be studied consisted of just 100 people, we would need a 79.5-person sample (that is, 79.5% of the population, a pretty sizable chunk of the whole population). If the population consisted of 1,000 people, we would need a 277.7-person sample (27.8% of the population). If the population consisted of 100,000 people, we would need a 382.7-person sample (0.38% of the population).
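These figures can be reproduced with the standard sample-size formula for a proportion with a finite population correction. The sketch below assumes that this is the formula behind the numbers and that p = 0.5 (the most conservative case); the function name `required_sample_size` and its parameter names are ours.

```python
from statistics import NormalDist

def required_sample_size(population, margin=0.05, confidence=0.95, p=0.5):
    """Unrounded sample size for estimating a proportion in a finite population."""
    # Two-sided critical value for the requested confidence level.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n0 = z**2 * p * (1 - p) / margin**2      # size for an unlimited population
    return n0 / (1 + (n0 - 1) / population)  # finite population correction

for N in (100, 1_000, 100_000):
    n = required_sample_size(N)
    print(f"N = {N:>7,}: n = {n:.1f} ({n / N:.2%} of the population)")
```

In practice the result is rounded up to the next whole person, since nobody can interview half a respondent.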

As you can see, the necessary sample size does increase with the size of the population, but not proportionally. The rate of increase tapers off as the sample represents a smaller and smaller percentage of the total population. In fact, once the population reaches a certain size (around 100,000 individuals), the sample size barely needs to grow at all. Check out the table below for a few examples:

Sample size necessary for a 5% margin of error with a 95% confidence level.

| Population | Necessary sample size | % of population |
| --- | --- | --- |
| 10 | 10 | 100% |
| 100 | 80 | 80% |
| 1,000 | 278 | 27.8% |
| 10,000 | 370 | 3.7% |
| 100,000 | 383 | 0.38% |
| 1,000,000 | 384 | 0.038% |
| 10,000,000 | 385 | 0.004% |
| 100,000,000 | 385 | 0.0004% |

Based on the information above, we know that however large the population, with 385 individuals we can estimate any percentage with the same level of error (a 5% margin of error with a confidence level of 95%). This is why sampling is such a powerful tool: it enables us to make reliable assertions, with a known margin of error, about a large number of individuals by studying just a small number of them.
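The ceiling of 385 can be read off the usual sample-size formula for a proportion. This is a sketch under assumptions the post does not state explicitly: a normal approximation and the conservative choice $p = 0.5$. The symbols are ours: $z$ is the critical value for the confidence level (about 1.96 for 95%), $e$ is the margin of error, $N$ is the population size, and $n_0$ is the sample size for an unlimited population.

$$
n_0 = \frac{z^2\,p(1-p)}{e^2} = \frac{1.96^2 \times 0.25}{0.05^2} \approx 384.2,
\qquad
n = \frac{n_0}{1 + \dfrac{n_0 - 1}{N}} \;\xrightarrow{\;N \to \infty\;}\; n_0
$$

As $N$ grows, the correction term $(n_0 - 1)/N$ shrinks toward zero, so $n$ can never exceed $n_0$. Rounding 384.2 up to a whole person gives 385.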

On the other hand, as we saw with the 100-person population above, sampling doesn’t work well with small populations. If there are ten students in my class, I need to learn each of their opinions to learn the class’s global opinion; I can't skip a single one. If we have a ten-person population and we don’t want to exceed our proposed level of error, we need to survey every individual.

ADVANTAGES AND DISADVANTAGES OF SAMPLING

Below, we’ve summarized the main advantages and disadvantages of using sampling to study a population.

Advantages:

- We don't have to study as many individuals, and we don’t need as many resources (time and money).

- Data manipulation is much simpler. If a 1,000-person sample is enough, why go analyzing a file with millions of records?

Disadvantages:

- We introduce (controlled) error to our results, since the nature of sampling requires generalization of results.

- We run the risk of biased results due to a poorly selected sample. For example, if individuals are selected for our sample in a nonrandom way, it could have a serious effect on our results.

THE SIMPLE RANDOM SAMPLE: DEFINITION AND ALTERNATIVES

The theory behind sampling is based on the concept of the simple random sample. In a simple random sample, individuals are selected from the population in a completely random fashion. This implies that all individuals have an identical (nonzero) probability of being selected for our sample.
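As a rough illustration, drawing a simple random sample takes only a few lines once a complete list of the population is available. The list of IDs below is a hypothetical stand-in for such a list, and the seed is there only to make the example repeatable.

```python
import random

# Hypothetical sampling frame: one ID per member of the population.
population = [f"person_{i}" for i in range(10_000)]

rng = random.Random(7)                  # seeded only for repeatability
sample = rng.sample(population, k=385)  # draw without replacement

# Every ID had the same chance of selection, and nobody appears twice.
print(len(sample), len(set(sample)))
```

The hard part in practice is not the draw itself but obtaining a complete, up-to-date list of the population to draw from.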

But theory is one thing and practice is another. It is only possible to select truly random samples in extremely controlled contexts. On the other hand, when a population is made up of groups whose members resemble one another (that is, groups that are internally homogeneous), we can take advantage of these groupings to improve the quality of our sample (or to reduce the necessary sample size).

In the next few posts, we’ll talk about different forms of sampling, starting with the two large umbrella categories: random and nonrandom sampling. See you then!

TABLE OF CONTENTS: Series on sampling

- Sampling: What it is and why it works

- Random and non-random sampling

- Random sampling: Simple random sampling

- Random sampling: Stratified sampling

- Random sampling: Systematic sampling

- Random sampling: Cluster sampling

- Non-random sampling: Availability sampling

- Non-random sampling: Quota sampling

- Non-random sampling: Snowball sampling
