It seems that the most convenient way to predict political results is to follow the polls. But can we actually trust them? This has become THE awkward question for pollsters.

I decided to bring this issue to the table since the political scene has been quite eventful lately and the role of the market research industry has been increasingly questioned because of the disparity between polls and final results. So I wanted to look a little more closely at the whole thing, if only to give myself some peace of mind.

The past months have been busy ones for politics in Europe. First we woke up on Friday June 24 to the shocking news that the Brexit campaign had won at the polls, as Britain chose to leave the European Union, despite most believing that Remain was just going to nick it, according to the last polls.

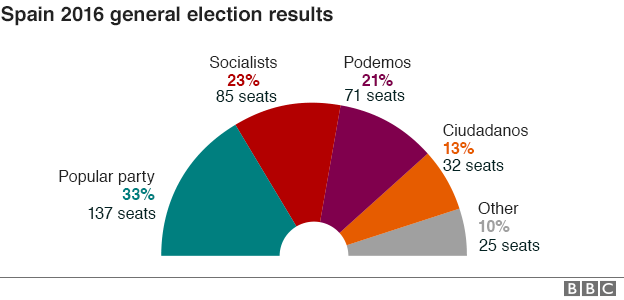

Only 2 days later we faced Spain's second general election, 6 months after the first one fractured the country. This time the PP picked up the most seats, but the deadlock remains since it’s short of a majority. Spain's other main party, the Socialist PSOE, came second, although the polls had suggested that the far-left Unidos Podemos coalition would make a historic breakthrough by pushing the Socialists into third place.

Both cases, coming after a year in which political polls have been imprecise more often, have been pretty traumatic experiences for the market research industry.

But let's go step by step to better understand the big picture.

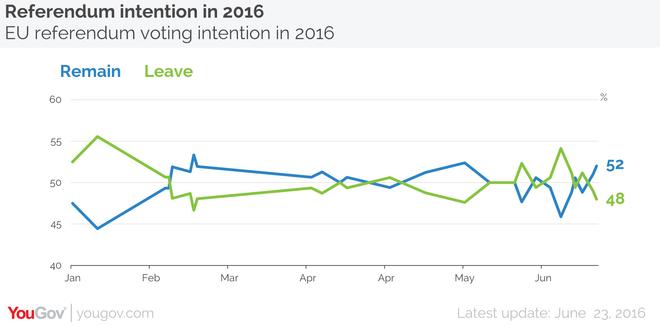

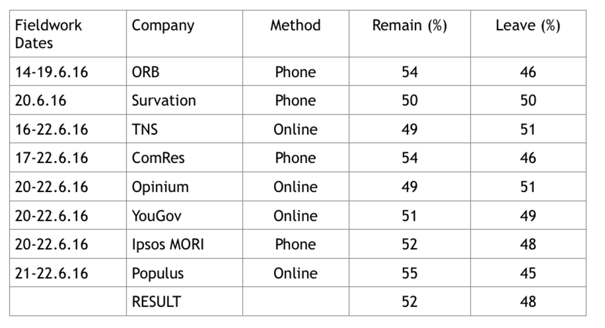

On the day before the vote in the UK's European Union membership referendum, the latest polls showed the Remain side ahead. The market research company YouGov put Remain up by 52% to 48%. And as the voting ended on Thursday, Ipsos MORI, another leading polling company, gave Remain an 8-point lead over the Leave side. Even the UK Independence Party’s leader, Nigel Farage, an unbending eurosceptic, thought Britain had voted to stay in the bloc. Instead the ‘Kingdom’ voted 52% to 48% to leave the EU, with more than 17.4 million people voting to leave and about 16.1 million to remain.

Well, no one was quite expecting that, especially anyone who was up to date with the poll results, which of course has once again called the reliability of political polls into question.

Sure, guessing the likely result of this referendum was always going to be a challenge for the opinion polls. Way more complex than in a general election, where pollsters have years of experience and yet still make mistakes, as has been evident on certain occasions.

However, we’re talking about a one-off referendum here, where not many patterns can be expected since it doesn’t occur regularly. That makes the pollsters' job considerably harder.

When looking up what experts had to say about it, I came across a very interesting read that, among other things, points out how the difference between survey and vote outcome can be broken down into five terms:

- Survey respondents not being a representative sample of potential voters (for whatever reason, Remain voters being more reachable or more likely to respond to the poll, compared to Leave voters)

- Survey responses being a poor measure of voting intentions (people saying Remain or Undecided even though it was likely they’d vote to leave). Check out our paper When Should We Ask, When Should We Measure? A unique opportunity to compare what people say to what people do.

- Shift in attitudes during the last day

- Unpredicted patterns of voter turnout, with more voting than expected in areas and groups that were supporting Leave, and lower-than-expected turnout among Remain supporters.

- And, of course, sampling variability.
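To put a number on that last term, here is a rough sketch of the usual 95% margin of error for a poll share. It assumes a simple random sample, which real polls only approximate, and a hypothetical sample size of 1,000, since the polls mentioned here are not quoted with their sample sizes. The function name is ours, for illustration only.

```python
import math

def margin_of_error(share, sample_size, z=1.96):
    """Approximate margin of error for a proportion.

    Assumes a simple random sample; z=1.96 corresponds to a 95% interval.
    """
    return z * math.sqrt(share * (1 - share) / sample_size)

# Hypothetical sample size (an assumption, not a figure from any poll above).
n = 1000
remain_share = 0.52

moe = margin_of_error(remain_share, n)
print(f"Remain {remain_share:.0%} +/- {moe * 100:.1f} points (n={n})")
```

Under these assumptions the margin comes out at roughly 3 points, so a 52/48 reading is compatible with either side being ahead on sampling variability alone, before any of the other four terms come into play.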

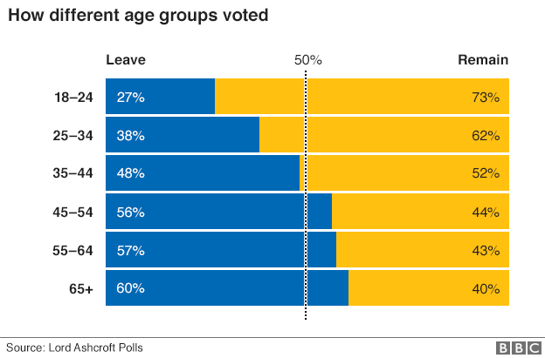

On the other hand, digging deeper, others suggest that the main division behind the debate comes from educational background more than from social class itself. With immigration a main part of the discussion, graduates tend to be more socially liberal and to support Remain, while those with few or no educational qualifications tend to be more socially conservative and to support Leave. Age also plays a role, with young people tending to be more socially liberal. This pattern was clearly visible in the final results.

Any survey containing too many or too few graduates risked overestimating or underestimating the level of support for remaining in the European Union.

Also, it’s important to take into account how the polls behaved depending on whether they were conducted by telephone or online, where the disparities were clear. For most of the campaign, telephone polls showed Remain ahead of Leave by 55% to 45%, while online polls showed the two sides at 50/50. And then, of course, there were the undecided, who, when pushed a little harder for a 'final' answer, would sometimes lean towards the Remain side, but who would ultimately stay uncertain until the very last moment.

This seems to have been ignored by those who were confident that the Remain side would end up winning. But in reality this divergence had already revealed how difficult it would be to estimate referendum voting intention accurately.
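A small sketch shows why the share of graduates in a sample matters so much. Every number below is made up for illustration (the group sizes, the support levels and the population shares are assumptions, not data from any real poll), and the reweighting shown is a bare-bones version of the kind of adjustment pollsters apply, not any particular company's method.

```python
def raw_and_weighted_share(sample, population_shares):
    """Return (raw, weighted) Remain share.

    raw: every respondent counts equally.
    weighted: each group counts according to its share of the population.
    """
    total = sum(group["n"] for group in sample.values())
    raw = sum(group["n"] * group["remain"] for group in sample.values()) / total
    weighted = sum(
        population_shares[name] * group["remain"] for name, group in sample.items()
    )
    return raw, weighted

# Hypothetical poll in which graduates make up half of the respondents...
sample = {
    "graduate": {"n": 500, "remain": 0.68},
    "non_graduate": {"n": 500, "remain": 0.40},
}
# ...but only 30% of the (hypothetical) electorate.
population_shares = {"graduate": 0.30, "non_graduate": 0.70}

raw, weighted = raw_and_weighted_share(sample, population_shares)
print(f"Raw Remain share: {raw:.1%}, reweighted: {weighted:.1%}")
```

With these invented figures, the unadjusted sample puts Remain ahead, while the reweighted one puts it behind, simply because graduates were over-represented among respondents.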

Source: The Conversation

So yeah, part of the error in the polls may be the result of decisions that pollsters made when adjusting their final data. Yet let’s not be too hard on these guys, since it’s undeniable that polling a referendum is a really tough task too.

The one where Spain delivers more uncertainty for Europe just after Brexit

June 26 was election day in Spain, and among its several headlines was the resounding failure of the polls… again. For weeks, all the surveys indicated that the left-wing protest party Unidos Podemos (Together We Can) would overtake the Socialist party PSOE, by a larger or smaller margin. But reality slapped the public opinion survey companies in the face: the Socialists held on to second place, beating Pablo Iglesias’ party by 14 seats, and the right-wing conservative PP achieved a wider-than-expected victory, although still without a majority. Why were they all wrong?

Experts agree that it was due to overestimating the Podemos vote, which unbalanced everything else. As mentioned previously, respondents sometimes express intentions that they ultimately do not follow through on. And that's what happened this time with the left-wing party's potential voters. Likewise, abstention among them was huge: some 20% of its supporters did not vote.

So, in fact, Podemos voters’ opinions were collected "faithfully"; however, it was not a loyal electorate but a volatile one.

In the same way, the impact of the Brexit result, which came just a couple of days before J-26, should not be overlooked. It is possible that voters were turned off more radical parties after the UK voted to leave the European Union and made Spain's fragile economy tremble. Political analysts consider that the referendum’s result strengthened Mariano Rajoy’s party and clearly harmed the new ones, especially Unidos Podemos. Either way, complex negotiations still lie ahead.

In a nutshell, the exact reason why the polls stumble often remains a mystery. Experts point to several potential causes: the likely-voter models may not reflect the actual electorate, the initial samples could be biased, or voters could make last-minute decisions that make even an accurate poll go wrong on election day. Moreover, just as there are self-fulfilling prophecies, the opposite can happen with surveys: when an outcome is predicted, supporters of the predicted winner can demobilize, or even vote for the other side to punish their preferred party somehow, thinking that this punishment will have no consequences. This can certainly change the outcome and make the survey inaccurate on certain occasions.

What is clear is that every time the media publish a political poll, social media goes wild, street talk gets real and political parties tremble. In fact, there's more interest in politics in general. And the truth is that polling is becoming more complicated because the social, economic and political landscape is getting highly unpredictable.

What can we say? This is just how the difficult and beautiful science of gauging political opinion goes. And brace yourselves: the US storm is coming soon.